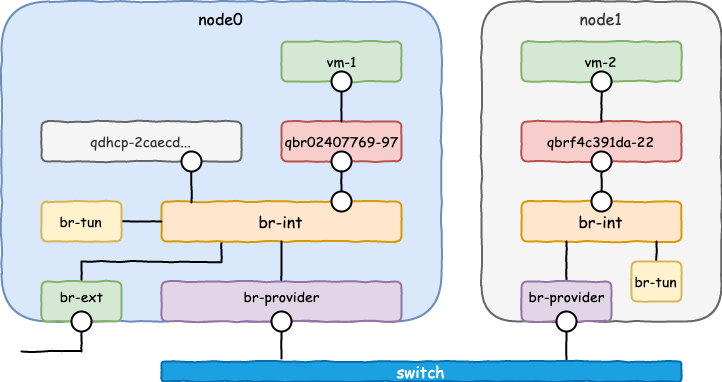

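The previous post walked through what happens in the underlying Linux system (namespaces, Linux bridges, and OVS) after a VM is created on each of two nodes.

This post focuses on the traffic path between the VMs on the two nodes, in particular the notoriously confusing OVS flow tables and how the two nodes connect the two VMs over a VXLAN network.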

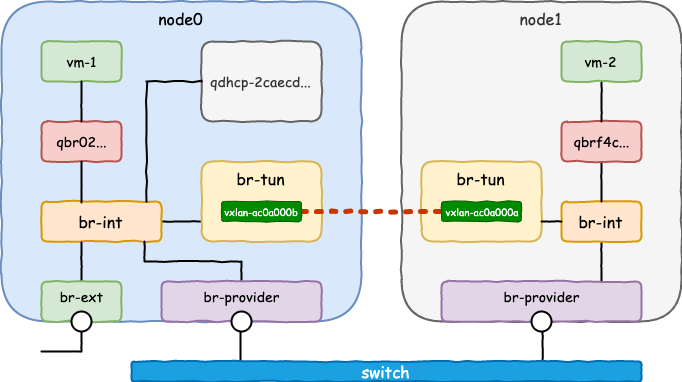

br-tun

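While collecting information earlier, you may have noticed that other things changed in the Linux bridges and in OVS as well. So let's take a look at br-tun.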

- ovs

(node0)$ ovs-vsctl show

This interface is not created as soon as the environment is set up. The VXLAN VTEP interface is created only after a VM has been created.

Flow tables

node0

- br-int

$ ovs-ofctl dump-flows br-int

table 0

- ICMP and ARP packets arriving from qvo02407769-97 are sent to table 24

- All other packets arriving from qvo02407769-97 are sent to table 25

- Packets from anywhere else are sent to table 60

table 24

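- Packets arriving from qvo02407769-97 are sent to table 60. The address fe80::f816:3eff:fead:669e in this flow is vm-1's IPv6 address

- ARP packets arriving from qvo02407769-97 whose ARP source address is 200.0.0.219, i.e. packets from vm-1, are sent to table 25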

table 25

Packets arriving from qvo02407769-97 whose source MAC is fa:16:3e:ad:66:9e, i.e. packets from vm-1, are sent to table 60.
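As a side note, the IPv6 address seen in table 24 and the MAC address seen in table 25 are consistent with each other: the link-local address looks like it was derived from the MAC using the modified EUI-64 scheme. The sketch below is only an illustration of that derivation. The function name `mac_to_link_local` is made up for this example, and whether vm-1 really generated its address this way is an assumption based on the two values matching.

```python
import ipaddress


def mac_to_link_local(mac: str) -> str:
    """Derive the modified EUI-64 IPv6 link-local address for a 48-bit MAC."""
    octets = [int(part, 16) for part in mac.split(":")]
    if len(octets) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    # Flip the universal/local bit (0x02) of the first octet.
    octets[0] ^= 0x02
    # Insert ff:fe in the middle to build the 64-bit interface identifier.
    eui64 = octets[:3] + [0xFF, 0xFE] + octets[3:]
    interface_id = int.from_bytes(bytes(eui64), "big")
    # Combine the identifier with the fe80::/64 link-local prefix.
    prefix = int(ipaddress.IPv6Address("fe80::"))
    return str(ipaddress.IPv6Address(prefix | interface_id))


# vm-1's MAC address from table 25
print(mac_to_link_local("fa:16:3e:ad:66:9e"))  # fe80::f816:3eff:fead:669e
```

The result matches the address matched in table 24, which suggests that tables 24 and 25 are both checking that traffic on this port really belongs to vm-1.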

table 60

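Normal forwarding, i.e. the bridge behaves like a regular L2 switch.

To sum up, packets from vm-1, as well as all other packets, are forwarded normally on br-int.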
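The br-int walk-through above can be summarized as a small decision function. This is a rough model, not a replacement for reading the real flow dump: the `Packet` class and its field names are invented for the example, and the "drop" result for vm-1 traffic that fails a check is an assumption, since the text only describes the flows that match.

```python
from dataclasses import dataclass
from typing import List, Optional, Union

VM_PORT = "qvo02407769-97"
VM_MAC = "fa:16:3e:ad:66:9e"
VM_IPV4 = "200.0.0.219"
VM_IPV6 = "fe80::f816:3eff:fead:669e"


@dataclass
class Packet:
    in_port: str
    eth_src: str
    proto: str                       # "arp", "icmp", or anything else
    arp_spa: Optional[str] = None    # ARP sender IP (hypothetical field)
    ipv6_addr: Optional[str] = None  # IPv6 address checked in table 24 (hypothetical field)


def trace_br_int(pkt: Packet) -> List[Union[int, str]]:
    """Return the tables a packet visits on br-int, ending in 'normal' or 'drop'."""
    path: List[Union[int, str]] = [0]
    if pkt.in_port != VM_PORT:
        return path + [60, "normal"]          # table 0: everything else
    if pkt.proto in ("arp", "icmp"):
        path.append(24)                       # table 0: ICMP/ARP from vm-1
        if pkt.proto == "icmp" and pkt.ipv6_addr == VM_IPV6:
            return path + [60, "normal"]
        if pkt.proto == "arp" and pkt.arp_spa == VM_IPV4:
            path.append(25)
        else:
            return path + ["drop"]            # assumption: no match means drop
    else:
        path.append(25)                       # table 0: other traffic from vm-1
    if pkt.eth_src == VM_MAC:                 # table 25: source MAC check
        return path + [60, "normal"]
    return path + ["drop"]                    # assumption: no match means drop


arp = Packet(VM_PORT, VM_MAC, "arp", arp_spa=VM_IPV4)
print(trace_br_int(arp))  # [0, 24, 25, 60, 'normal']
```

Under these assumptions, legitimate traffic from vm-1 always ends up in table 60, which is the conclusion the walk-through reaches.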

- br-tun

$ ovs-ofctl dump-flows br-tun

table 0

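- Packets arriving from br-int (i.e. packets that need to leave this node) are sent to table 2

- Packets arriving from vxlan-ac0a000b (i.e. packets sent to node0 from other nodes) are sent to table 4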
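The tunnel port name itself carries some information. The Neutron OVS agent usually names tunnel ports after the remote endpoint's IPv4 address written in hex, so the name can be decoded back into an address. The helper below is a small sketch under that assumption; `tunnel_port_remote_ip` is a made-up name, and the text does not state the peer node's address, so treat the decoded value as something to verify against your own environment.

```python
import ipaddress


def tunnel_port_remote_ip(port_name: str) -> str:
    """Decode the hex suffix of a 'vxlan-<hex>' port name into dotted-quad form."""
    prefix, _, hex_ip = port_name.partition("-")
    if prefix != "vxlan" or len(hex_ip) != 8:
        raise ValueError(f"unexpected tunnel port name: {port_name!r}")
    # Interpret the 8 hex digits as a 32-bit IPv4 address.
    return str(ipaddress.IPv4Address(int(hex_ip, 16)))


print(tunnel_port_remote_ip("vxlan-ac0a000b"))  # 172.10.0.11
```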

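table 2: packets that are about to leave this node

- Sent to table 20 (in a typical Neutron OVS setup this is the unicast case)

- Sent to table 22 (typically the broadcast and multicast case)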

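table 4: packets sent to this node from other nodes

Packets with tun_id 15 (0xf) are tagged with VLAN 1 and sent to table 10.

In other words, when a packet comes out of the VXLAN tunnel with tunnel ID 15, its VLAN is set to 1.

And the VLAN tag of both qvo02407769-97 (connected to vm-1) and tap17a89323-fd (the DHCP tap interface) is exactly 1.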

table 10

learn(table=20,hard_timeout=300,priority=1,cookie=0x9c915752f14d2544,NXM_OF_VLAN_TCI[0..11],NXM_OF_ETH_DST[]=NXM_OF_ETH_SRC[],load:0->NXM_OF_VLAN_TCI[],load:NXM_NX_TUN_ID[]->NXM_NX_TUN_ID[],output:OXM_OF_IN_PORT[]),output:"patch-int"

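This action looks very complicated, but if you set aside what is inside learn(), the packet simply ends up being sent to br-int.

The learn() part adds a forwarding rule to table 20 for the return traffic.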
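To make the learn() action less opaque, here is a toy model of what it installs. Reading the action field by field: the new table 20 flow matches the VLAN ID of the incoming packet and uses the packet's source MAC as the destination MAC to match; its actions clear the VLAN, reuse the packet's tun_id, and output to the port the packet came in on. The `LearnedFlow` class, the dictionary used as "table 20", and the remote MAC address are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class LearnedFlow:
    vlan_vid: int        # match: NXM_OF_VLAN_TCI[0..11]
    eth_dst: str         # match: NXM_OF_ETH_DST[] = source MAC of the learned packet
    set_tun_id: int      # action: load:NXM_NX_TUN_ID[]->NXM_NX_TUN_ID[]
    out_port: str        # action: output:OXM_OF_IN_PORT[]
    hard_timeout: int = 300
    priority: int = 1


def learn_from(vlan_vid: int, eth_src: str, tun_id: int, in_port: str) -> LearnedFlow:
    """Build the reverse-direction flow for a packet seen in table 10."""
    return LearnedFlow(vlan_vid=vlan_vid, eth_dst=eth_src,
                       set_tun_id=tun_id, out_port=in_port)


table20: Dict[Tuple[int, str], LearnedFlow] = {}

# A frame from a remote VM (hypothetical MAC) arrives through the tunnel port.
remote_mac = "fa:16:3e:00:00:02"
flow = learn_from(vlan_vid=1, eth_src=remote_mac, tun_id=15, in_port="vxlan-ac0a000b")
table20[(flow.vlan_vid, flow.eth_dst)] = flow

# A later reply from vm-1 to that MAC on VLAN 1 now hits the learned flow.
hit = table20[(1, remote_mac)]
print(hit.out_port, hit.set_tun_id)  # vxlan-ac0a000b 15
```

The effect is that a unicast reply can go straight out of the right tunnel port from table 20 instead of falling through to table 22.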

table 20

Sent to table 22

table 22

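Packets with VLAN tag 1 have the VLAN stripped and tun_id set to 15 (0xf), and are then sent out of vxlan-ac0a000b.

In summary:

- Packets from vm-1 carry VLAN tag 1; the VLAN tag is stripped, tun_id is set to 15, and the packet is sent out of the VXLAN interface

- Packets from other nodes with tun_id 15 are tagged with VLAN 1 and sent to br-int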
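The two directions through br-tun can also be traced in a few lines of code. The sketch below follows the table numbers from the walk-through, with two assumptions that the text does not confirm: table 2 is taken to split on unicast versus broadcast/multicast destination MAC, and packets with an unknown VLAN or tun_id are treated as dropped (returned as `None`). Learned flows in table 20 are left out for brevity, and all function names are made up.

```python
from typing import List, Optional, Tuple

LOCAL_VLAN = 1
TUN_ID = 15
VXLAN_PORT = "vxlan-ac0a000b"
PATCH_PORT = "patch-int"

Result = Tuple[List[int], Optional[dict]]


def is_unicast(mac: str) -> bool:
    """A MAC is unicast when the lowest bit of its first octet is 0."""
    return int(mac.split(":")[0], 16) & 0x01 == 0


def outbound(vlan: int, eth_dst: str) -> Result:
    """Packet from br-int leaving the node: tables 0 -> 2 -> (20 ->) 22."""
    path = [0, 2]
    if is_unicast(eth_dst):
        path += [20, 22]   # assumption: unicast goes via table 20, which falls back to 22
    else:
        path.append(22)    # assumption: broadcast/multicast goes straight to 22
    if vlan != LOCAL_VLAN:
        return path, None  # assumption: unknown VLANs are dropped
    # table 22: strip the VLAN, set tun_id, send out of the VXLAN port
    return path, {"vlan": None, "tun_id": TUN_ID, "out_port": VXLAN_PORT}


def inbound(tun_id: int) -> Result:
    """Packet from the tunnel entering the node: tables 0 -> 4 -> 10."""
    path = [0, 4]
    if tun_id != TUN_ID:
        return path, None  # assumption: unknown tunnel IDs are dropped
    path.append(10)
    # table 4 tags VLAN 1; table 10 outputs to patch-int (towards br-int)
    return path, {"vlan": LOCAL_VLAN, "out_port": PATCH_PORT}


print(outbound(1, "ff:ff:ff:ff:ff:ff"))
print(inbound(15))
```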

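Summary

That completes the analysis of the flow tables on node0. The flow tables on node1 are largely the same, so they are not covered here.

This concludes the experiment and analysis of cross-node VM-to-VM access. You will have noticed that:

- A bridge is created for every port when it is attached to a VM

- Every VM interface attached to br-int is assigned a VLAN tag according to its subnet, and the tag is local to each node, so it differs between nodes

- When a VM's packet leaves or enters the current node, the VLAN tag and the VXLAN tun_id are translated into each other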
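The last two points fit together: because the VLAN tag only has meaning inside one node, the tun_id is what actually identifies the network on the wire. A minimal sketch of that idea follows. The `NodeVlanMap` class is invented for the example, node0's mapping (VLAN 1, tun_id 15) comes from the flow tables above, and node1's VLAN 3 is a made-up value since its flow tables are not shown.

```python
from typing import Dict


class NodeVlanMap:
    """Per-node translation between local VLAN tags and VXLAN tunnel IDs."""

    def __init__(self, name: str, vlan_to_tun_id: Dict[int, int]) -> None:
        self.name = name
        self.vlan_to_tun_id = dict(vlan_to_tun_id)
        self.tun_id_to_vlan = {tun: vlan for vlan, tun in vlan_to_tun_id.items()}

    def egress(self, vlan: int) -> int:
        """Leaving the node: local VLAN tag -> tun_id."""
        return self.vlan_to_tun_id[vlan]

    def ingress(self, tun_id: int) -> int:
        """Entering the node: tun_id -> local VLAN tag."""
        return self.tun_id_to_vlan[tun_id]


node0 = NodeVlanMap("node0", {1: 15})   # from the node0 flow tables
node1 = NodeVlanMap("node1", {3: 15})   # VLAN 3 is a hypothetical value

tun_id = node0.egress(1)                # vm-1's frame leaves node0
vlan_on_node1 = node1.ingress(tun_id)   # and is re-tagged on node1
print(tun_id, vlan_on_node1)  # 15 3
```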

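Now, a couple of questions to think about:

- How does vm-1 on node1 obtain its IP address through DHCP?

- Why is a VM not attached directly to br-int, but connected to br-int through a Linux bridge instead?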